Introduction

Prompt engineering is an emerging skill that shapes how people interact with AI systems, particularly large language models such as GPT-3 and GPT-4. How useful a model is often depends not only on the model itself but also on how well the user communicates with it.

Advanced prompt engineering focuses on getting precise, contextually relevant responses from these models. It draws on an understanding of both natural language processing and human psychology.

This chapter takes an in-depth look at advanced prompting techniques: why they matter, how they work, and where they apply. The methods covered include few-shot prompting, chain-of-thought prompting, self-consistency, knowledge-augmented prompts, PAL, ReAct, and RAG.

Topics

Introduction to Prompt Engineering

Elements of a Prompt

Principles and Tactics for Effective Prompting

Advanced Techniques

Summary

Additional Resources

Section 1: Introduction to Prompt Engineering

What is Prompt Engineering?

Prompt engineering is the art and science of crafting queries or commands that guide AI models, particularly language models, to produce desired outputs. It involves:

Creating questions, statements, or instructions.

Experimenting with different prompts to optimize interactions.

Iterative testing to refine the prompts.

Key Takeaways

Prompt engineering is an iterative process.

It plays a critical role in optimizing the output of language models.

Section 2: Elements of a Prompt

A typical prompt consists of up to five elements:

Instruction: What the model is expected to do.

Context: Background information, if any.

Examples: Optional but helpful.

Input Data: The actual data the model will work on.

Output Indicator: A cue that marks where the model's output should begin, such as a trailing "Answer:".
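The five elements above can be assembled mechanically. The following is a minimal sketch, not a standard API: the function name `build_prompt`, the "Input:"/"Output:" labels, and the sample sentiment task are all assumptions chosen for illustration.

```python
def build_prompt(instruction, input_data, context=None, examples=None,
                 output_indicator="Answer:"):
    """Assemble a prompt from the five elements; optional ones are skipped when empty."""
    parts = [instruction]
    if context:
        parts.append(f"Context: {context}")
    for example_input, example_output in examples or []:
        parts.append(f"Input: {example_input}\nOutput: {example_output}")
    parts.append(f"Input: {input_data}")
    parts.append(output_indicator)  # the last line cues the model to start answering
    return "\n\n".join(parts)


# Hypothetical task used only to show the layout of the finished prompt.
prompt = build_prompt(
    instruction="Classify the sentiment of the review as positive or negative.",
    context="Reviews come from an online bookstore.",
    examples=[("A wonderful read.", "positive"), ("Dull and far too long.", "negative")],
    input_data="I could not put it down.",
    output_indicator="Output:",
)
print(prompt)
```

Keeping each element as a separate argument makes it easy to test a prompt with and without context or examples, which suits the iterative process described earlier.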

Key Takeaways

Examples are optional but can enhance the quality of output.

Context is important for specific types of tasks.

Section 3: Principles and Tactics for Effective Prompting

Principle 1: Write Clear and Specific Instructions

Tactics:

Use Delimiters: Separate distinct parts of the input.

Structured Output: Ask the model to produce output in a structured format, like JSON.

Condition Checks: Instruct the model to validate conditions before outputting.

Provide Examples: Known as few-shot prompting, this helps guide the model.
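As a rough illustration of the first three tactics, the sketch below wraps the input in delimiters, asks for JSON, and includes a condition check. The `####` delimiter, the JSON keys, and the sample reply are assumptions made for this example; the reply is written by hand and is not real model output.

```python
import json

DELIMITER = "####"  # any marker that is unlikely to appear in the input text


def build_extraction_prompt(text):
    """Wrap the input in delimiters and request a fixed JSON shape."""
    return (
        f"The text to analyze is enclosed in {DELIMITER} markers.\n"
        "If it contains a sequence of instructions, reply with JSON of the form "
        '{"has_steps": true, "steps": ["...", "..."]}.\n'
        'Otherwise reply with {"has_steps": false, "steps": []}.\n'
        f"{DELIMITER}{text}{DELIMITER}"
    )


def parse_reply(reply):
    """Parse the structured reply and check that it has the expected shape."""
    data = json.loads(reply)
    if not isinstance(data, dict) or not isinstance(data.get("steps"), list):
        raise ValueError("reply is missing a 'steps' list")
    return data


prompt = build_extraction_prompt("Boil water. Add tea leaves. Steep for three minutes.")

# Hypothetical reply standing in for what a model might return.
sample_reply = (
    '{"has_steps": true, '
    '"steps": ["Boil water", "Add tea leaves", "Steep for three minutes"]}'
)
result = parse_reply(sample_reply)
print(result["steps"])
```

Because the reply is plain JSON, the calling code can validate it directly instead of scraping free text.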

Principle 2: Give the Model Time to Think

Tactics:

Specify Steps: Detail the steps required for the model to complete the task.

Work Out Solutions: Instruct the model to first work out its own solutions before rushing to a conclusion.

Key Takeaways

Using delimiters and structured output can reduce post-processing.

Condition checks and self-verification are critical for accuracy.

Principle 3: The Importance of Clarity

Explanation

A clear prompt sets the stage for an accurate and relevant output. Clarity helps the model understand the context and the nature of the information being sought.

Bullet Commands/Instructions

Always specify the context when necessary.

Use concise language but don't sacrifice critical details.

Avoid ambiguity in phrasing.

Principle 4: Leveraging Temperature and Max Tokens

Explanation

The temperature setting influences the randomness of the model's output. Lower values make the output more focused and deterministic. The max tokens parameter limits the response length.

Bullet Commands/Instructions

For more deterministic outputs, set temperature to a low value like 0.2.

Use max tokens to limit the response to a specific length.
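To see why a low temperature makes output more deterministic, it helps to look at how temperature is commonly applied: the model's raw scores are divided by the temperature before being turned into probabilities. The sketch below assumes that standard softmax formulation; the three scores are made up and do not come from any real model.

```python
import math


def softmax_with_temperature(scores, temperature):
    """Convert raw scores to probabilities after dividing by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtracting the maximum avoids overflow in exp()
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


scores = [2.0, 1.0, 0.5]  # made-up scores for three candidate tokens
low = softmax_with_temperature(scores, 0.2)
high = softmax_with_temperature(scores, 1.0)

print([round(p, 3) for p in low])   # almost all probability on the top token
print([round(p, 3) for p in high])  # probability spread across the candidates
```

At temperature 0.2 the top-scoring token takes nearly all of the probability mass, so sampling almost always picks it; at 1.0 the alternatives keep a meaningful share.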

Principle 5: The Role of System Messages

Explanation

System messages can guide the behavior of the model throughout a session. These messages are not visible to the end-user but instruct the model on the tone, format, or other specific requirements for the conversation.

Bullet Commands/Instructions

Use system messages to set the tone or specific requirements at the beginning of a session.

Remember that system messages remain effective for the entire session unless updated.
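Many chat interfaces represent a session as a list of messages, each with a role and content, with the system message placed first. The sketch below assumes that convention; the helper names are hypothetical, and no particular vendor's API is called.

```python
def start_session(system_message):
    """Begin a session whose first entry is the system message."""
    return [{"role": "system", "content": system_message}]


def add_user_turn(messages, text):
    """Return a new message list with the user's turn appended."""
    return messages + [{"role": "user", "content": text}]


session = start_session("You are a concise assistant. Answer in at most two sentences.")
session = add_user_turn(session, "What is prompt engineering?")
session = add_user_turn(session, "Give one example.")

for message in session:
    print(f'{message["role"]}: {message["content"]}')
```

The system message stays at the head of the list for every later turn, which is why it keeps shaping responses for the whole session unless it is replaced.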

Principle 6: Handling Multiple Questions

Explanation

Asking multiple questions in one prompt can lead to incomplete or rushed answers. However, it's essential to balance this with the need for comprehensive responses.

Bullet Commands/Instructions

If multiple questions are necessary, number them for clarity.

Consider using separate prompts for each question to ensure detailed responses.

Principle 7: Prompting for Iterative Responses

Explanation

Sometimes, you might need the model to think step-by-step or iterate over a concept. You can prompt the model to build upon previous responses for a more nuanced answer.

Bullet Commands/Instructions

Use phrases like "Can you elaborate?" or "What's the next step?"

Reference the model's previous answers to build a coherent narrative.

Section 4: Advanced Techniques

Few-Shot Prompting: Provides context to the model via examples.

Thought Sharing: Allows the model to "think aloud," improving transparency.

Self-Consistency: Samples several responses to the same prompt and keeps the answer on which they most often agree.

Knowledge Generation: Techniques to make the model generate new knowledge based on existing data.

Program-Aided Language Models (PAL): Has the model write code and offloads tasks such as calculation to an external program.

Reasoning and Acting (ReAct): A framework in which the model alternates between reasoning steps and actions.

Retrieval-Augmented Generation (RAG): Retrieves relevant documents from an external corpus and supplies them to the model as context for its answer.

Few-Shot Prompting

Explanation

Few-shot prompting involves giving a language model several examples to guide it toward specific types of answers.

Ideal for specialized tasks.

Commands/Instructions

Provide initial examples that closely match your desired task.

Follow with the prompt for the task you want the model to perform.

Few-shot prompting involves providing the model with multiple examples to guide it towards the kind of responses you're looking for. This method is particularly useful for specialized tasks where the model may require more context to generate appropriate answers.

Example

If you want the model to translate English sentences into French, you might provide a few examples first:

Translate the following English sentences to French:

1. "Hello" -> "Bonjour"

2. "Goodbye" -> "Au revoir"

3. "I love you" ->

Include 2-3 examples before asking your main question.

Make sure examples are closely related to the question you're asking.

Clearly separate the examples from the actual question.
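The translation example can be generated from a list of example pairs. This is a minimal sketch; the function name `few_shot_prompt` and the arrow layout are illustrative assumptions rather than a required format.

```python
def few_shot_prompt(task, examples, query):
    """Build a prompt with worked examples followed by the open question."""
    lines = [task, ""]
    for source, target in examples:
        lines.append(f'"{source}" -> "{target}"')
    lines.append("")  # blank line separates the examples from the actual question
    lines.append(f'"{query}" ->')
    return "\n".join(lines)


prompt = few_shot_prompt(
    "Translate the following English sentences to French:",
    [("Hello", "Bonjour"), ("Goodbye", "Au revoir")],
    "I love you",
)
print(prompt)
```

Ending the prompt with an unfinished line in the same pattern as the examples signals to the model exactly what kind of completion is expected.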

Chain-of-Thought Prompting

Explanation

This method is useful for complex reasoning tasks.

The model is asked to think step-by-step explicitly.

Commands/Instructions

Begin the prompt by asking the model to think step-by-step.

Pose the complex question or task.

Chain of thought prompting involves asking the model to think in a step-by-step manner, often explicitly stating that within the prompt. This is useful for tasks requiring complex reasoning or multi-step solutions.

Example

Explain how to solve the equation \(x^2 - 4x + 4 = 0\) step-by-step.

Use phrases like "step-by-step" or "walk me through" in your prompt.

Be explicit in asking the model to think sequentially.
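One practical pattern is to ask for the reasoning first and the result on a clearly marked final line, so the answer can be extracted afterwards. The sketch below assumes an "Answer:" marker of our own choosing; the sample reply is written by hand for illustration and is not real model output.

```python
import re


def chain_of_thought_prompt(question):
    """Ask for step-by-step reasoning followed by a marked final answer."""
    return (
        "Think through the problem step by step, then give the result "
        "on a final line that starts with 'Answer:'.\n\n"
        f"Question: {question}"
    )


def extract_answer(reply):
    """Return the text after 'Answer:' or None if the marker is missing."""
    match = re.search(r"^Answer:\s*(.+)$", reply, flags=re.MULTILINE)
    return match.group(1).strip() if match else None


prompt = chain_of_thought_prompt("Solve x^2 - 4x + 4 = 0.")

# Hypothetical reply showing the shape of a step-by-step response.
sample_reply = (
    "Step 1: The left side factors as (x - 2)^2.\n"
    "Step 2: (x - 2)^2 = 0 only when x - 2 = 0.\n"
    "Answer: x = 2"
)
answer = extract_answer(sample_reply)
print(answer)
```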

Self-Consistency

Explanation

Focuses on generating consistent answers across multiple examples.

Useful for eliminating inconsistencies in model outputs.

Commands/Instructions

Provide multiple examples for the same kind of question.

Ask the model to maintain consistency across these examples.

Self-consistency involves asking the model for multiple outputs and checking that they agree. As the technique is usually described, the same prompt is sampled several times and the most frequent answer is kept; a simpler prompt-level variant asks for several related outputs at once and tells the model to keep them consistent. Either way, the aim is to avoid contradictory or inconsistent information.

Example

Provide definitions for the following terms, and make sure they are consistent with each other: 1. Machine Learning 2. Artificial Intelligence 3. Neural Networks

Ask for multiple related outputs in a single prompt.

Use a phrase like "make sure they are consistent with each other" to guide the model.
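When the same prompt is sampled several times, a common way to apply self-consistency is a majority vote over the final answers. The sketch below assumes the answers have already been collected; the sampled list is made up for illustration, and the normalization step (trimming and lowercasing) is a simplification.

```python
from collections import Counter


def most_consistent_answer(answers):
    """Return the most frequent answer and the share of samples that agree with it."""
    normalized = [a.strip().lower() for a in answers if a.strip()]
    if not normalized:
        raise ValueError("no answers to vote on")
    winner, votes = Counter(normalized).most_common(1)[0]
    return winner, votes / len(normalized)


# Hypothetical final answers from five samples of the same prompt.
sampled = ["x = 2", "x = 2", "x = -2", "X = 2 ", "x = 2"]
answer, agreement = most_consistent_answer(sampled)
print(answer, agreement)
```

The agreement share is a useful signal in its own right: a low value suggests the prompt is ambiguous or the task is near the edge of what the model handles reliably.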

Knowledge-Augmented Prompts

Explanation

Additional contextual information is fed to the model.

The query is then based on this additional context.

Commands/Instructions

Input the additional knowledge context.

Pose questions or tasks that rely on this extra information.

This technique involves feeding the model with additional context or data, and then querying it based on that context. This is useful when you have specific, contextual information you want the model to consider.

Example

Given that the speed of light is \(3 \times 10^8\) m/s and Planck's constant is \(6.626 \times 10^{-34}\) J·s, calculate the energy of a photon with a wavelength of 500 nm.

Provide all necessary background information upfront.

Make your query specific to the additional context provided.
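It is worth checking the expected result independently so the model's answer can be verified. The sketch below places the facts ahead of the question and computes the photon energy from E = hc/λ, using the rounded speed of light from the example and the standard value of Planck's constant, h ≈ 6.626 × 10^-34 J·s. The function names are hypothetical.

```python
SPEED_OF_LIGHT = 3e8         # m/s, the rounded value quoted in the example
PLANCK_CONSTANT = 6.626e-34  # J*s, standard value to four significant figures


def knowledge_augmented_prompt(facts, question):
    """Place the supplied facts ahead of the question that depends on them."""
    fact_lines = "\n".join(f"- {fact}" for fact in facts)
    return f"Use the following facts:\n{fact_lines}\n\nQuestion: {question}"


def photon_energy(wavelength_m):
    """Energy in joules of a photon with the given wavelength in meters (E = h*c/lambda)."""
    if wavelength_m <= 0:
        raise ValueError("wavelength must be positive")
    return PLANCK_CONSTANT * SPEED_OF_LIGHT / wavelength_m


prompt = knowledge_augmented_prompt(
    ["Speed of light: 3e8 m/s", "Planck's constant: 6.626e-34 J*s"],
    "What is the energy of a photon with a wavelength of 500 nm?",
)
energy = photon_energy(500e-9)  # 500 nm expressed in meters
print(f"{energy:.4e} J")
```

With these inputs the energy comes out at roughly 3.98 × 10^-19 J, which gives a reference value for judging the model's reply.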

PAL (Program-Aided Language Models)

Explanation

Offloads some tasks like calculations or logical reasoning to an external program.

Commands/Instructions

Define the external program.

Interface the language model with this external agent for specialized tasks.

PAL involves offloading tasks to an external program, often for calculations or logical reasoning tasks. This allows for more accurate and complex operations than the model could achieve alone.

Example

Calculate the factorial of 5 using Python code.

Specify the task you want offloaded and the tool to be used.

Make sure to define the task clearly so that it can be programmatically executed.
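The offloading step can be sketched as follows: the model writes a small program, and the host runs it and reads back the result. The generated program below is hypothetical and written by hand, and the convention of storing the answer in a variable named `result` is an assumption of this sketch. Restricting the built-ins is only a convenience here and is not a security sandbox; code from a model should be run in a properly isolated environment.

```python
def run_generated_program(source):
    """Execute model-written code and return the value it stores in `result`."""
    # Expose only what this demo needs; this is NOT a real sandbox.
    namespace = {"__builtins__": {"range": range}}
    exec(source, namespace)
    return namespace["result"]


# Hypothetical program standing in for code a model might write when asked
# to calculate the factorial of 5 using Python code.
generated_code = """
result = 1
for n in range(1, 6):
    result *= n
"""

value = run_generated_program(generated_code)
print(value)
```

The arithmetic is done by the interpreter rather than by the model, which is the point of the technique: the model only has to get the program right.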

ReAct (Reasoning and Acting)

Explanation

The model both reasons and takes actions iteratively.

Can interact with external systems to refine its actions.

Commands/Instructions

Set up a ReAct loop around the model and give it access to the tools it may call.

Pose questions that require iterative reasoning and action.

ReAct involves the model both reasoning and taking actions iteratively. It can interact with external systems, gather more data, reason over it, and refine its actions.

Example

Connect to a weather API, retrieve the current temperature, and recommend an outfit based on it.

Clearly define the series of actions you want the model to take.

Specify any external systems the model should interact with.
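The loop behind this pattern can be sketched without a real model or a real weather service. Everything named here is a stand-in: `scripted_model` imitates the model with fixed rules, `get_temperature` is a stub with made-up readings, and the "Thought / Action / Observation / Final Answer" line format is one common convention assumed for this example.

```python
def get_temperature(city):
    """Stub tool with made-up readings; a real agent would call a weather API here."""
    return {"Oslo": 2, "Cairo": 31}.get(city, 18)


def scripted_model(history):
    """Stand-in for a language model: decides the next step from the history."""
    if not any(entry.startswith("Observation:") for entry in history):
        return "Thought: I need the current temperature.\nAction: get_temperature[Oslo]"
    temperature = int(history[-1].split(":")[1])
    outfit = "a warm coat" if temperature < 10 else "a light shirt"
    return f"Thought: I can recommend clothing now.\nFinal Answer: wear {outfit}"


def react_loop(question, model, tools, max_steps=5):
    """Alternate model steps and tool calls until a final answer appears."""
    history = [f"Question: {question}"]
    for _ in range(max_steps):
        step = model(history)
        history.append(step)
        last_line = step.splitlines()[-1]
        if last_line.startswith("Final Answer:"):
            return last_line[len("Final Answer:"):].strip(), history
        # Parse an action of the form "Action: tool_name[argument]".
        name, _, argument = last_line[len("Action:"):].strip().partition("[")
        observation = tools[name](argument.rstrip("]"))
        history.append(f"Observation: {observation}")
    raise RuntimeError("no final answer within the step limit")


answer, history = react_loop(
    "What should I wear in Oslo today?",
    scripted_model,
    {"get_temperature": get_temperature},
)
print(answer)
```

The step limit matters in practice: without it, a model that never produces a final answer would keep calling tools indefinitely.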

RAG (Retrieval-Augmented Generation)

Explanation

A two-step process involving retrieval and generation.

Useful for sourcing specific information from a large corpus.

Commands/Instructions

Implement the retrieval system (Retriever) over the corpus you want to draw on.

Set up the generation system (Generator), typically the language model itself.

Query the model based on this two-step architecture.

RAG involves a two-step process: a retriever first finds the passages in an external corpus that are most relevant to the query, and a generator then answers the query using those passages as context.

Example

Here are passages retrieved from a history corpus on the causes of World War I: [retrieved passages]. Using only these passages, answer the question: "What was the role of alliances in causing World War I?"

Use a two-part prompt: one part containing the retrieved passages and another containing the question.

Make sure the retrieved passages are closely related to the question for coherent outputs.
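In the usual formulation of RAG, a retriever selects relevant passages from a corpus and the model then answers with those passages in its prompt. The sketch below illustrates that flow with a deliberately naive retriever that scores passages by shared words; production systems typically use embeddings or a search index instead. The three-sentence corpus, the stop-word list, and the function names are assumptions made for this example.

```python
STOP_WORDS = {"a", "an", "and", "as", "i", "in", "into", "of", "the", "to", "was", "what"}


def tokenize(text):
    """Lowercase the text, strip basic punctuation, and drop stop words."""
    words = {word.strip('.,?!"').lower() for word in text.split()}
    return {word for word in words if word and word not in STOP_WORDS}


def retrieve(query, corpus, top_k=2):
    """Return up to top_k passages that share at least one content word with the query."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(passage)), passage) for passage in corpus]
    ranked = sorted(scored, key=lambda item: item[0], reverse=True)
    return [passage for score, passage in ranked[:top_k] if score > 0]


def rag_prompt(query, passages):
    """Place the retrieved passages ahead of the question."""
    context = "\n".join(f"- {passage}" for passage in passages)
    return (
        "Answer the question using only the passages below.\n\n"
        f"Passages:\n{context}\n\nQuestion: {query}"
    )


# Tiny illustrative corpus; a real one would hold many documents.
corpus = [
    "Alliances such as the Triple Entente and the Triple Alliance tied the great powers to one another.",
    "The assassination of Archduke Franz Ferdinand in June 1914 set off the July Crisis.",
    "Photosynthesis converts light energy into chemical energy.",
]
query = "What was the role of alliances in causing World War I?"
passages = retrieve(query, corpus, top_k=1)
print(rag_prompt(query, passages))
```

Because the knowledge lives in the corpus rather than in the model's weights, updating what the system knows only requires changing the documents.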

Challenges and Considerations

Fine-tuning vs. RAG: Fine-tuning is complex and costly; RAG offers a more flexible and less expensive alternative.

Safety Concerns: Prompt injection, jailbreaking, and prompt leaking are risks to consider for secure prompt engineering.

Advanced prompt engineering is a multifaceted skill that requires an understanding of both the model and the task at hand. Applying the techniques and best practices in this chapter can improve the precision, contextual relevance, and depth of a model's responses, and make its behavior more reliable.

Effective work with large language models also means weighing ethical aspects alongside technical ones, including prompt safety.

Additional Resources

OpenAI Hackathon on Prompt Engineering

Research papers on Few-Shot Learning

Online tutorials on advanced prompt techniques

Microsoft's Prompt Engineering Techniques

OpenAI’s Best Practices for Prompt Engineering

DeepLearning.AI's Prompt Engineering for Developers

Prompt Engineering Institute

Prompt Engineering Guide

Slides

Slide 1: Introduction to Advanced Prompt Engineering

Content:

Aim: Provide an in-depth look at advanced techniques and principles for effective prompt engineering.

Importance: Maximizing the effectiveness of prompts for precise and contextually relevant responses.

Image Description: A diagram showcasing the interaction between humans and AI.

Speaker's Narrative Notes:

Aim: "Our aim today is to go beyond the basics and delve into the advanced techniques of prompt engineering."

Importance: "This is crucial because the quality of the interaction you have with an AI model is often determined by the quality of your prompts."

Slide 2: What is Prompt Engineering?

Content:

Definition: The art and science of crafting queries or commands for AI models.

Key Takeaways:

Iterative Process

Critical Role in Optimizing Output

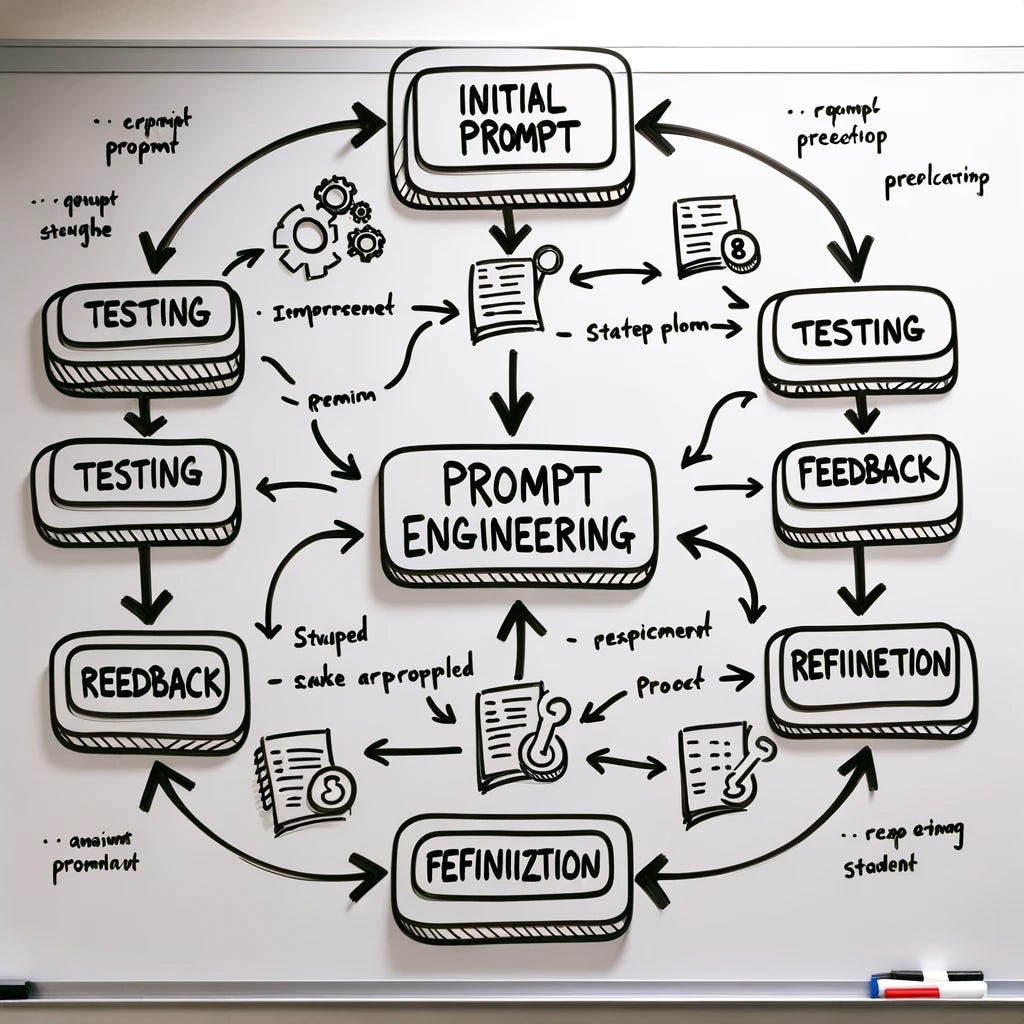

Image Description: A flowchart representing the iterative process of prompt engineering.

Speaker's Narrative Notes:

Definition: "Think of prompt engineering as both an art and a science. It combines creativity with methodical testing."

Iterative Process: "It's not something you get right the first time; it involves a cycle of refinement."

Critical Role: "And why is this important? Because the right prompt can make or break your interaction with the model."

Slide 3: Elements of a Prompt

Content:

Components of a Prompt:

Instruction

Context

Examples

Input Data

Output Indicator

Key Takeaways:

Examples can enhance quality

Context is important

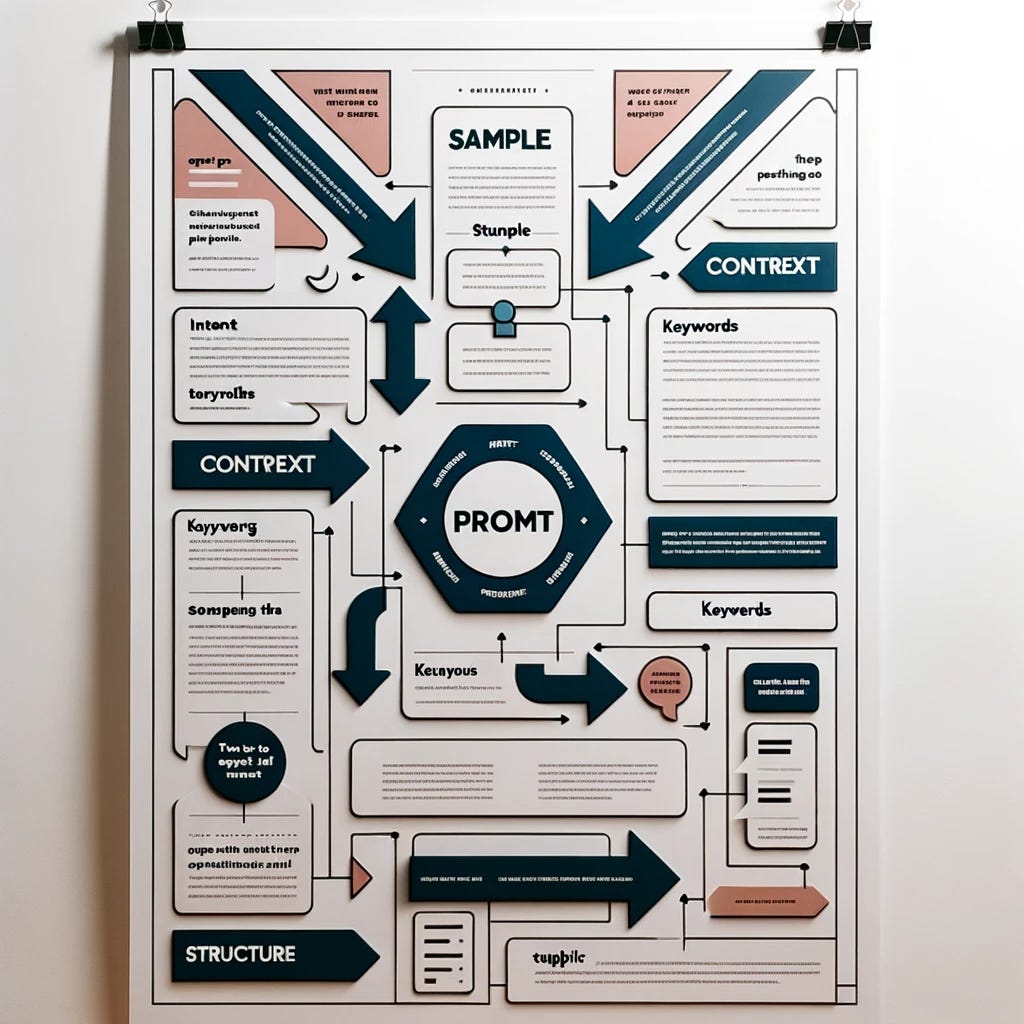

Image Description: An infographic breaking down a sample prompt into its elements.

Speaker's Narrative Notes:

Components: "A prompt is composed of various elements, each serving a unique function."

Examples: "For instance, including examples can often guide the model better."

Context: "And never underestimate the power of context; it can make your prompt far more effective."

Slide 4: Principles for Effective Prompting (Part 1)

Content:

Principle 1: Write Clear and Specific Instructions

Use Delimiters

Structured Output

Condition Checks

Provide Examples

Principle 2: Give the Model Time to Think

Specify Steps

Work Out Solutions

Image Description: Two balance scales, one labeled "Clarity" and the other "Time," representing the balancing act between clear instructions and giving the model time.

Speaker's Narrative Notes:

Principle 1: "The first principle focuses on clarity and specificity. For example, using delimiters can help segment your prompt for easier processing by the model."

Principle 2: "The second principle is about patience. Don't rush the model; give it specific steps to follow and time to think."

Slide 5: Principles for Effective Prompting (Part 2)

Content:

Principle 3: The Importance of Clarity

Always specify the context

Use concise language

Avoid ambiguity

Principle 4: Leveraging Temperature and Max Tokens

For deterministic outputs, use low temperature

Use max tokens to limit response length

Image Description: A magnifying glass focused on a clear crystal, symbolizing clarity, and a thermometer next to a ruler, symbolizing temperature and length.

Speaker's Narrative Notes:

Principle 3: "Clarity isn't just about what you say; it's about leaving no room for doubt. Be explicit, but also be concise."

Principle 4: "Sometimes, you need to control how the AI thinks. Temperature and token limits are your levers for that control."

Slide 6: Principles for Effective Prompting (Part 3)

Content:

Principle 5: The Role of System Messages

Set tone or requirements at session start

Effective for entire session

Principle 6: Handling Multiple Questions

Number questions for clarity

Use separate prompts for detail

Image Description: A speaker icon and a checklist, representing system messages and multiple questions.

Speaker's Narrative Notes:

Principle 5: "System messages are like your opening act; they set the stage but stick around influencing the entire session."

Principle 6: "When you have multiple questions, make it easy for the AI to give you multiple answers. Numbering or separating prompts can help."

Slide 7: Principles for Effective Prompting (Part 4)

Content:

Principle 7: Prompting for Iterative Responses

Use phrases like "Can you elaborate?"

Reference previous answers

Image Description: A staircase, symbolizing the step-by-step or iterative nature of some tasks.

Speaker's Narrative Notes:

Principle 7: "Sometimes you need the model to think in iterations, to build upon what it has already said. Phrases like 'Can you elaborate?' can help."

Slide 8: Advanced Techniques (Part 1)

Content:

Few-Shot Prompting

Provides context via examples

Ideal for specialized tasks

Thought Sharing

Allows the model to "think aloud"

Improves transparency

Image Description: Two contrasting images: one of a bullseye, symbolizing the focused nature of few-shot prompting, and another of a thought bubble, symbolizing thought sharing.

Speaker's Narrative Notes:

Few-Shot Prompting: "Few-shot prompting is like giving the model a warm-up round with examples. It's especially useful for specialized tasks."

Thought Sharing: "Ever wish you could see what the model is 'thinking'? Thought sharing aims to make the model's thought process more transparent."

Slide 9: Advanced Techniques (Part 2)

Content:

Self-Consistency

Generates consistent answers

Eliminates inconsistencies

Knowledge Generation

Generates new knowledge based on existing data

Useful for specialized tasks

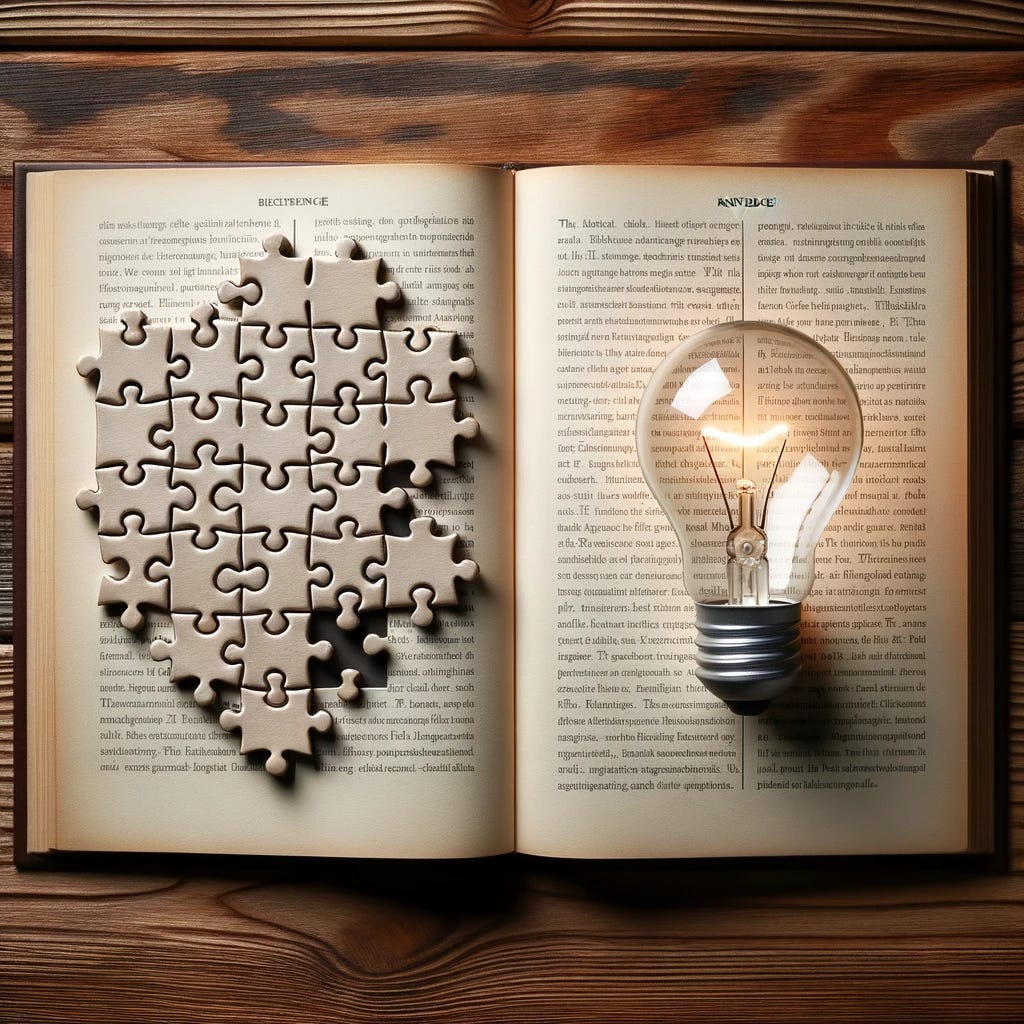

Image Description: A puzzle with all pieces fitting perfectly, symbolizing self-consistency, and a light bulb over a book, symbolizing knowledge generation.

Speaker's Narrative Notes:

Self-Consistency: "Imagine asking for directions and getting conflicting answers. Frustrating, right? Self-consistency aims to eliminate such inconsistencies."

Knowledge Generation: "Sometimes, you need the AI to go beyond what's explicitly provided and generate new insights or knowledge."

Slide 10: Advanced Techniques (Part 3)

Content:

PAL (Program-Aided Language Models)

Offloads tasks to an external program

Useful for specialized tasks

ReAct (Reasoning and Acting)

Involves iterative reasoning and action

Can interact with external systems

Image Description: A robot arm holding a wrench, symbolizing PAL's task offloading, and a computer connected to various icons like a weather cloud and a database, symbolizing ReAct's external interactions.

Speaker's Narrative Notes:

PAL: "PAL allows you to interface the language model with external programs, essentially extending its capabilities."

ReAct: "ReAct takes it a step further. It not only reasons but also acts, even interacting with external systems to refine its actions."

Slide 11: Advanced Techniques (Part 4)

Content:

RAG (Retrieval-Augmented Generation)

Two-step process: retrieval and generation

Useful for sourcing information from a large corpus

Image Description: A search icon and a book, symbolizing the retrieval and generation aspects of RAG.

Speaker's Narrative Notes:

RAG: "RAG is like having a librarian and a researcher in one system. It first retrieves the relevant material and then uses it to answer your specific query."

Slide 12: Challenges and Considerations

Content:

Fine-tuning vs. RAG

Safety Concerns: Prompt injection, jailbreaking, and leaking

Image Description: A caution sign next to a gear, symbolizing the challenges and considerations in prompt engineering.

Speaker's Narrative Notes:

Fine-tuning vs. RAG: "Fine-tuning is resource-intensive. RAG offers a flexible, less expensive alternative."

Safety Concerns: "As with any technology, there are safety considerations. Being aware of these can help mitigate risks."

Slide 13: Conclusion

Content:

Importance of mastering advanced techniques

Consideration of both technological and ethical aspects

Image Description: A graduation cap next to a balance scale, symbolizing the mastery of techniques and the balance of ethical considerations.

Speaker's Narrative Notes:

Importance: "Mastering these techniques is not just about better answers; it's about effective and ethical AI interactions."

Ethical Aspects: "Technology is not devoid of ethics. As we strive for effectiveness, let's also consider the ethical implications."